Nothing is an absolute reality, all is permitted

What is truth in the age of machine learning?

Recently, a paper provocatively titled ChatGPT is bullshit1 has been making the rounds. It’s a philosophical paper that argues that LLMs are deceiving their users. The authors regard “bullshit to be characterized not by an intent to deceive but instead by a reckless disregard for the truth”, which certainly sounds true for a system that does nothing but produce text based on statistical analysis.

The paper goes through defining two degrees of bullshit, describing how LLMs work, and then observing that the output of LLMs can be regarded as bullshit. The reasoning is that the system does not have any notion of “truth” and – according to the paper – all of its users are also not interested in truth2, which in my opinion is a bit of an overgeneralisation.

ChatGPT, according to the authors, is a bullshitter not unlike a lot of people:

Like the human bullshitter, some of the outputs will likely be true, while others not. And as with the human bullshitter, we should be wary of relying upon any of these outputs.

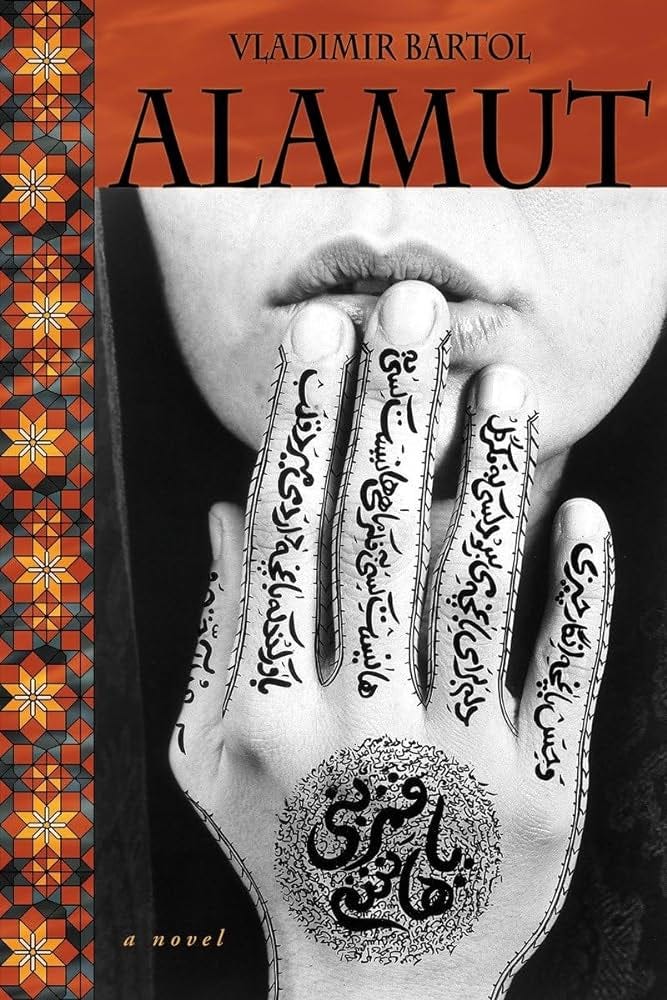

This April we visited Char’s family in Ireland. In their garden is a shed that Char’s father built once one daughter had moved to Copenhagen and another one to San Francisco. When you move country you tend to leave a lot of stuff behind, things too good to donate to charity and too heavy to haul to another country. In Char’s case – and in mine, where everything is in my parents’ basement – the stuff is 90% books. The books live in the shed and whenever we visit we pack as many as can fit into our luggage. We do so rather randomly, just grabbing whatever looks interesting. This time, we found a book that Char had owned for 15 years but never got around to: Alamut, a 1938 Slovenian novel by Vladimir Bartol telling the story of Hasan-i Sabbah, founder of the Hashshashin (the Order of Assassins)3.

Hasan founded his own Shia Muslim sect called Nizari Isma’ili and was a master of strategy and deception. And in that he was a master of the long game. He took over Alamut castle without bloodshed by hatching a plan that took over a year to come to fruition. To rid the lands of Persia of foreign Seljuk rule, he schemed in an even more elaborate fashion. He recruited and educated a regiment of assassins who would take out key people in the government. For that purpose, he first had to invent the kind of brain-washing that is necessary to get people to risk their lives for political gain. And of course he also needed strategic thinkers who would devise the political strategy with him.

He devised two different kinds of belief system for these two groups. One for the foot soldiers, the assassins, the army. And another one for the leaders. The maxim of an assassin was that he4 was the one “who offers his life for others or in the service of a particular cause”. The maxim of the leaders of the sect, according to Bartol, was “Nothing is an absolute reality, all is permitted”.

This last one, to me, sounds surprisingly like a “reckless disregard for the truth”. Truth is created instead of found, according to this school of thought. Establishing truth is a process that is neither deception, nor discovery. It is an act of creation.

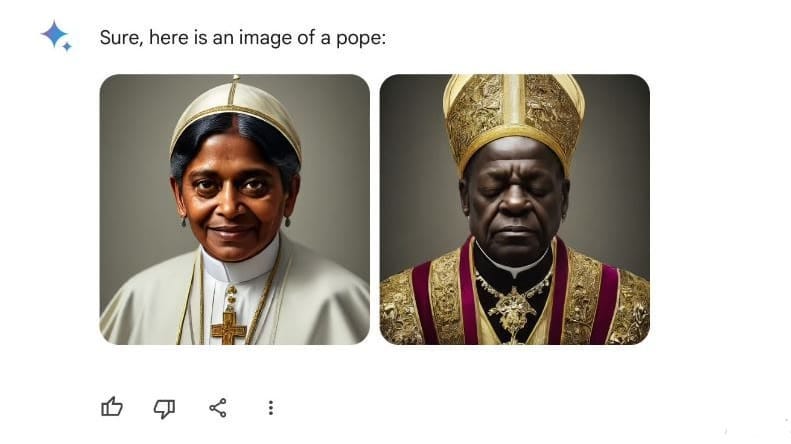

When Google Gemini launched there was a big outcry. The system produced images of Vikings with historically incorrect skin colour and female popes. It would retroactively bring diversity to historical situations that did not feature much of it. While the people in any reconstructed (or “bullshitted”) images never existed in the first place, it was very important for users that they could have existed and looked a certain way. Not all is permitted, even if there is very little absolute truth in a reconstructed image. This makes perfect sense if you regard machine learning models as what they are in their pure form: statistical approximations of a certain representation of truth they have learned. Depending on the training material, in which a winner has written history, this representation might be closer to or further away from the lived experience of a person alive during those times. In any case, truth was created at some point. How do we balance the fact that winners write history in an intentional way with reality being something that actually happened (but that was additionally perceived differently by different observers)?

Should the single female pope in history be just a tiny point in a sea of data or should she be a significant example of who can be a pope5? The writers of church history would certainly prefer the former. History, though, was written by the Catholic Church and they decided what a pope has to look like by only electing white male popes. They have an excellent track record in message control6. In a way, what the Catholic Church did over millennia is quite similar to what machine learning model vendors call “alignment”, the process of encoding specific values and goals into large language models7. The church curated a canon, made their own translations of source texts, and amended the lore where necessary in order to encode specific values and goals. They aligned truth to their values and goals.

What Google did when they pushed their model to produce more inclusive images was similarly alignment: they wanted the model to produce the kind of images that align with Google’s values. They simply forgot that most of history didn’t. They forgot about that truth. Google had the best intentions but one could argue that they added a layer of deception by not allowing the model to produce the statistically correct answer and instead intentionally pushed the output even further from any absolute truth and historical accuracy.

Statistically analysing human artefacts is already only creating an approximation of an indirect reflection of reality. Making the past “safe” via alignment can easily veer into a “reckless disregard for the truth”. The past was not a safe place, after all. Google made it obvious that the past as we see it is a wilful creation8.

In the “ChatGPT is bullshit” paper, the authors make the argument that intentionality (“belief”) is a differentiating factor between bullshit and credibility. The lack of a “will for truth” inevitably leads to deception:

The machines are not trying to communicate something they believe or perceive. Their inaccuracy is not due to misperception or hallucination. As we have pointed out, they are not trying to convey information at all. They are bullshitting.

It is factually incorrect to state that the machines are not communicating something they perceive. The statistical analysis that forms the basis of the training process is creating such a perspective. The values encoded in the training material will surface in the output. This is what we’re talking about when we talk about “bias”. And it is why alignment was invented.

Machine learning models communicate what the algorithm perceives. The model runs the math of what the most probable next token should be, according to its training materials9. Of course it is not “trying to convey information”. That formulation anthropomorphises the system. A water pipe is not “trying to transport water” either. What a machine learning model does, though, is provide a probabilistic perspective on its training materials in relation to the prompt. The inaccuracy stems from the design itself and the lack of a measure of confidence in its output is indeed a gigantic design flaw.
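As a minimal sketch of what “running the math” could look like, here is a toy next-token step in Python. Everything in it is an assumption made for illustration: the four-word vocabulary, the scores in `toy_logits`, and the helper names `softmax` and `sample_next_token` are hypothetical and not taken from any real model. It only shows the general idea of turning scores into a probability distribution and then either picking the most probable token or sampling from the distribution.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits.values())
    # Subtracting the highest score keeps the exponentials numerically stable.
    exps = {token: math.exp(score - highest) for token, score in logits.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def sample_next_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for continuing a prompt such as "The first female pope was".
# These numbers are invented for the example, not produced by a real model.
toy_logits = {"Joan": 2.0, "never": 1.5, "Mary": 0.5, "a": 0.1}

probs = softmax(toy_logits)
most_probable = max(probs, key=probs.get)              # greedy choice
sampled = sample_next_token(probs, random.Random(0))   # weighted dice roll
```

Note that the probabilities here describe how likely a token is as a continuation given the scores, not how likely the resulting sentence is to be true. That gap is the missing measure of confidence mentioned above.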

Art is bullshit. The production of art is nothing but wilful deception. Authors come up with invented historical details, fill gaps of knowledge with made-up facts, and invent whole people all the time. Then those people are played by actors who get paid for credibly bullshitting. Painters are known to depict impossible scenes and offer politically motivated one-sided interpretations of events. Even photographs are rumoured to be staged at times. Very often artists will go so far as writing a problematic figure that is rooted in a value system they object to. Quite a lot of art – think about instrumental music and abstract paintings – is not trying to convey information at all. Unless you count a well-composed rhythm as information.10

Artists are of course not indifferent towards the concept of truth. Quite the opposite is the case. Yet most art is created as an externalisation of an internal truth of the artist more than raising a claim to factuality. A lot of “absolute truth” is intentionally left out. I don’t know enough about the agendas of individual artists to say for sure whether they “mislead the audience about the utterer’s agenda”. I have met artists with all kinds of motivations for their creative work and wouldn’t rule out manipulation altogether.11

Does it matter? Am I making the argument that ChatGPT is an artist? Of course not12. But what I would say is that for the production of art, interacting with our biased, incomplete, agenda-riddled documented past is a worthwhile endeavour. Interacting with machine learning models lets us engage with more of our past at once than a visit to the library does. It can’t replace the library – because sometimes you gotta go deep instead of wide – but it can complement it. I don’t trust the validity of the output of machine learning models any more than I would trust a dice roll. But serendipity has value. These models have value for teaching, research, entertainment, creativity, and other areas where we reflect using different perspectives. Even in business they can play a role in scenarios where a potential truth (and a potential untruth) can act as an inspiration.

The technology does not lend itself to providing anything but unintentional opinions of a machine. My truth is my reflection on the output of the model in relation to classically researched facts, made-up worlds, and half-remembered stories. Absolute, simple, straightforward truth? I’d look for that somewhere else13.

EDIT: After some reader feedback, I edited a sentence to clarify that I’m far from thinking ChatGPT is an artist. Quite the opposite, actually. I also added a link to LAIKA to make sure my claims about LLMs being useful for “teaching, research, entertainment, creativity” are not unsubstantiated, added “entertainment”, and made the sentence less vague.

1. The title of the paper should of course be “ChatGPT is producing bullshit” or “ChatGPT is bullshitting” but I couldn’t have resisted the catchy insult either, if I’d written it. It’s an intentional deception – or maybe just jumping to conclusions.

2. “… the person using it to turn out some paper or talk isn’t concerned either with conveying or covering up the truth (since both of those require attention to what the truth actually is), and neither is the system itself.”

3. Yes, this is where the name “assassin” came from. They were the OGs.

4. Intentionally not writing this sentence gender-neutral because the Ismaili had very binary views on that topic.

5. It does not matter if she is fictional or not for this argument. Any potential pope is as “real” as a living breathing specimen. Hatsune Miku is a pop star, after all.

6. While I would love to go in depth on the many failings of the Catholic church in this post, I will refrain from doing so because it leads a little bit too far into the wrong territory. In any case, consider the plight of Galileo!

7. Officially, alignment is usually described like this: “Alignment is the process of encoding human values and goals into large language models to make them as helpful, safe, and reliable as possible.” This leaves out the question of for whom they are safe to use. It also does not account for potential conflicts between helpfulness, safety and reliability. Are there universal human values? Is a historically accurate approximation more reliable or a modern interpretation of a historical event? What happens if you remove the topic of race from a text about colonialism? I’m not arguing against the idea of alignment – I’m just trying to show how insanely complicated it is.

8. I hope all archaeologists forgive me for saying that. I know some of them agree with me and are aware of the political dimension of archaeology.

9. I am fully aware that diffusion models work slightly differently and don’t produce tokens but conceptually they are still doing statistical analysis, which is the important part.

10. I do, as a PhD in informatics. A carefully balanced level of surprisal is key to a good groove.

11. What most people don’t know is that I made a living as a media artist for a few years. I’ve met and talked to hundreds of fellow artists back in those days.

12. ChatGPT is nothing by itself and can be anything depending on how it’s used. But even that will never turn it into an artist: like any other machine, it can only be an artwork, not an artist, even if it is autonomous, which has been done a lot in media art.

13. Between the replication crisis and automated state propaganda there are very few places left though. It’s maybe easier for natural sciences but the humanities are more a permanent tug of war than a place for absolute truths and I wouldn’t want it any other way. Organised religion is the only place that comfortably lays out absolute truths (and then no one absolutely believes them or always follows their rules because, thankfully, people are people): Nothing is an absolute reality, all is permitted.